Collaborative

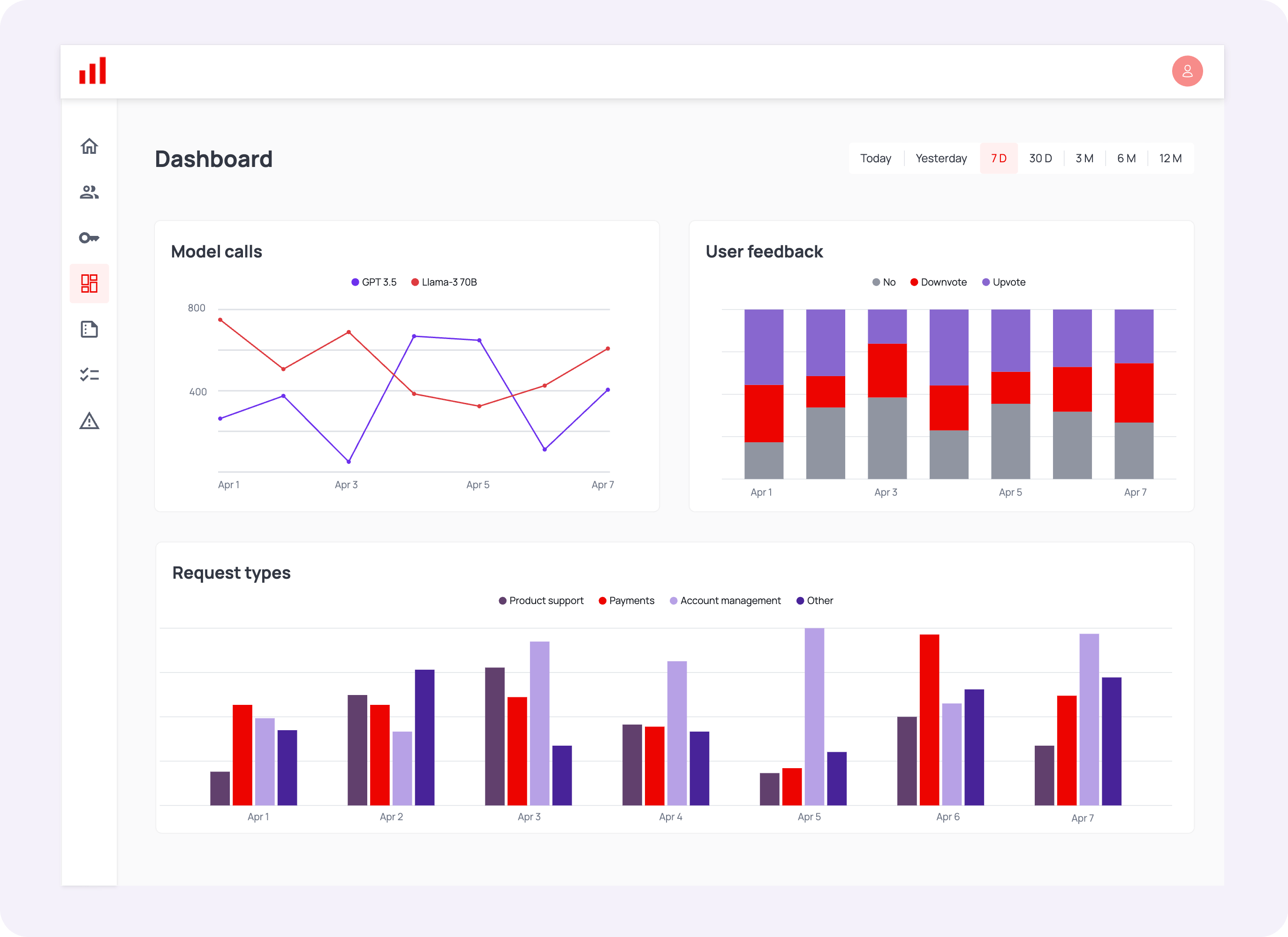

AI observability platform

Evaluate, test, and monitor your AI-powered products.

Get started

LLM and RAGs

ML models

Data pipelines

open source

Powered by the leading open-source ML monitoring library

Our platform is built on top of Evidently, a trusted open-source ML monitoring tool.

With 100+ metrics readily available, it is transparent and easy to extend.

Learn more

5500+ GitHub stars

25M+ downloads

2500+ community members

Components

AI quality toolkit from development to production

Start with ad hoc tests on sample data. Transition to monitoring once the AI product is live. All within one tool.

Evaluate

Get ready-made reports to compare models, segments, and datasets side-by-side.

Test

Run systematic checks to detect regressions, stress-test models, or validate during CI/CD.

Monitor

Monitor production data and run continuous testing. Get alerts with rich context.

GEN AI

LLM observability

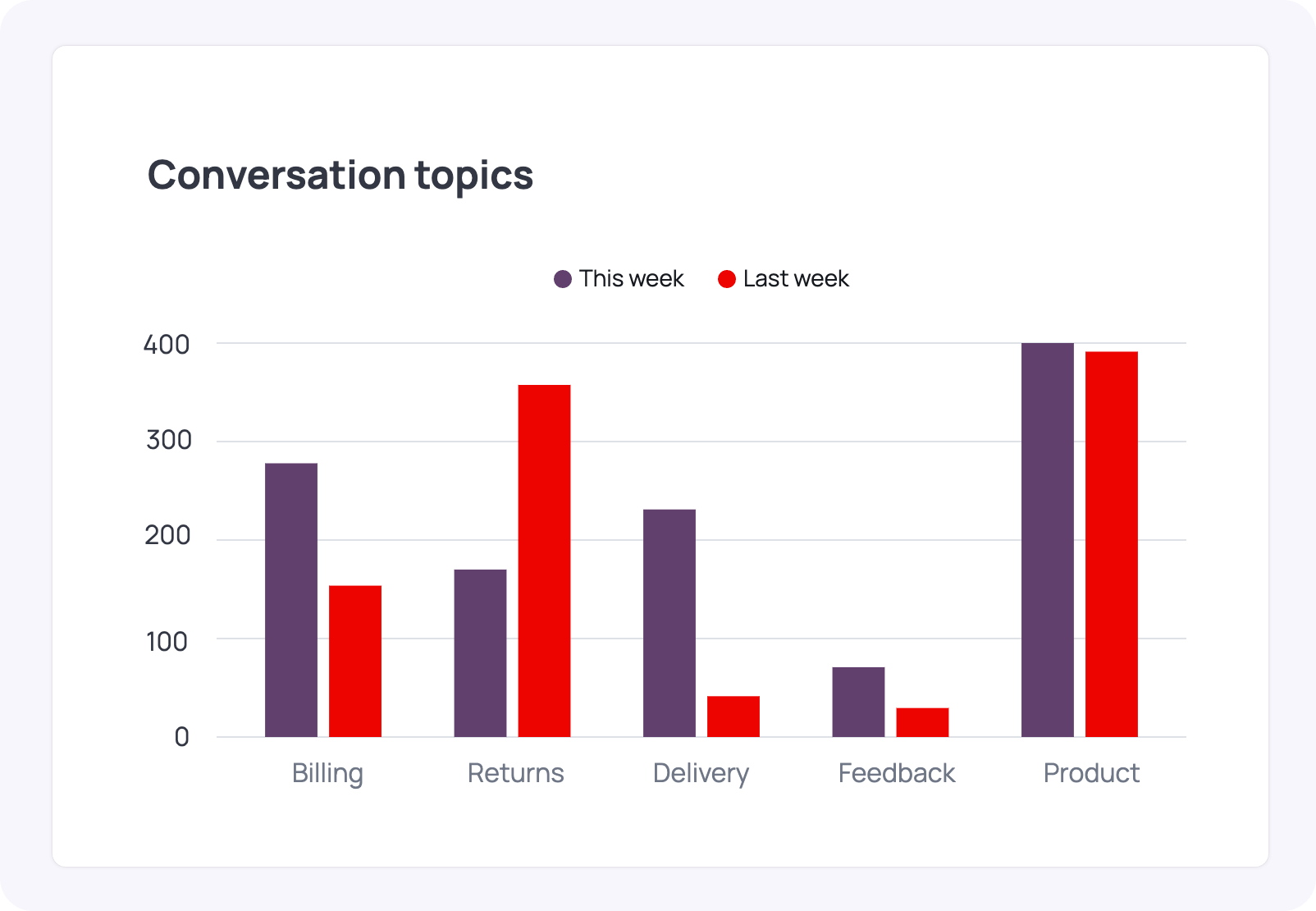

Gain visibility into any AI-powered application, including chatbots, RAGs, and complex AI assistants.

Adherence to guidelines and format

Hallucinations and factuality

PII detection

Retrieval quality and context relevance

Sentiment, toxicity, tone, trigger words

Custom evals with any prompt, model, or rule

LLM EVALS

Track what matters to your AI product

Easily design your own AI quality system. Use the library of 100+ in-built metrics, or add custom ones. Combine rules, classifiers, and LLM-based evaluations.
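As a rough illustration of the rule-based side of such a system, here is a minimal sketch in plain Python. It does not use the Evidently API: the function name `evaluate_response`, the email pattern, and the 500-character limit are all hypothetical choices made for this example.

```python
import re

# Hypothetical, deliberately simple email pattern used as a stand-in for PII detection.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def evaluate_response(text, trigger_words, max_length=500):
    """Apply three illustrative rules to a single model response."""
    lowered = text.lower()
    return {
        # Rule 1: does the response leak something that looks like an email address?
        "contains_email": bool(EMAIL_PATTERN.search(text)),
        # Rule 2: which trigger words (case-insensitive substring match) appear?
        "trigger_words": sorted(w for w in trigger_words if w.lower() in lowered),
        # Rule 3: does the response respect the assumed length limit?
        "within_length": len(text) <= max_length,
    }


result = evaluate_response(
    "Contact me at jane@example.com for a refund.",
    trigger_words={"refund", "lawsuit"},
)
print(result)
```

Rules like these are cheap to run on every response; classifier-based or LLM-based checks could then be reserved for qualities that simple patterns cannot capture.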

Learn more

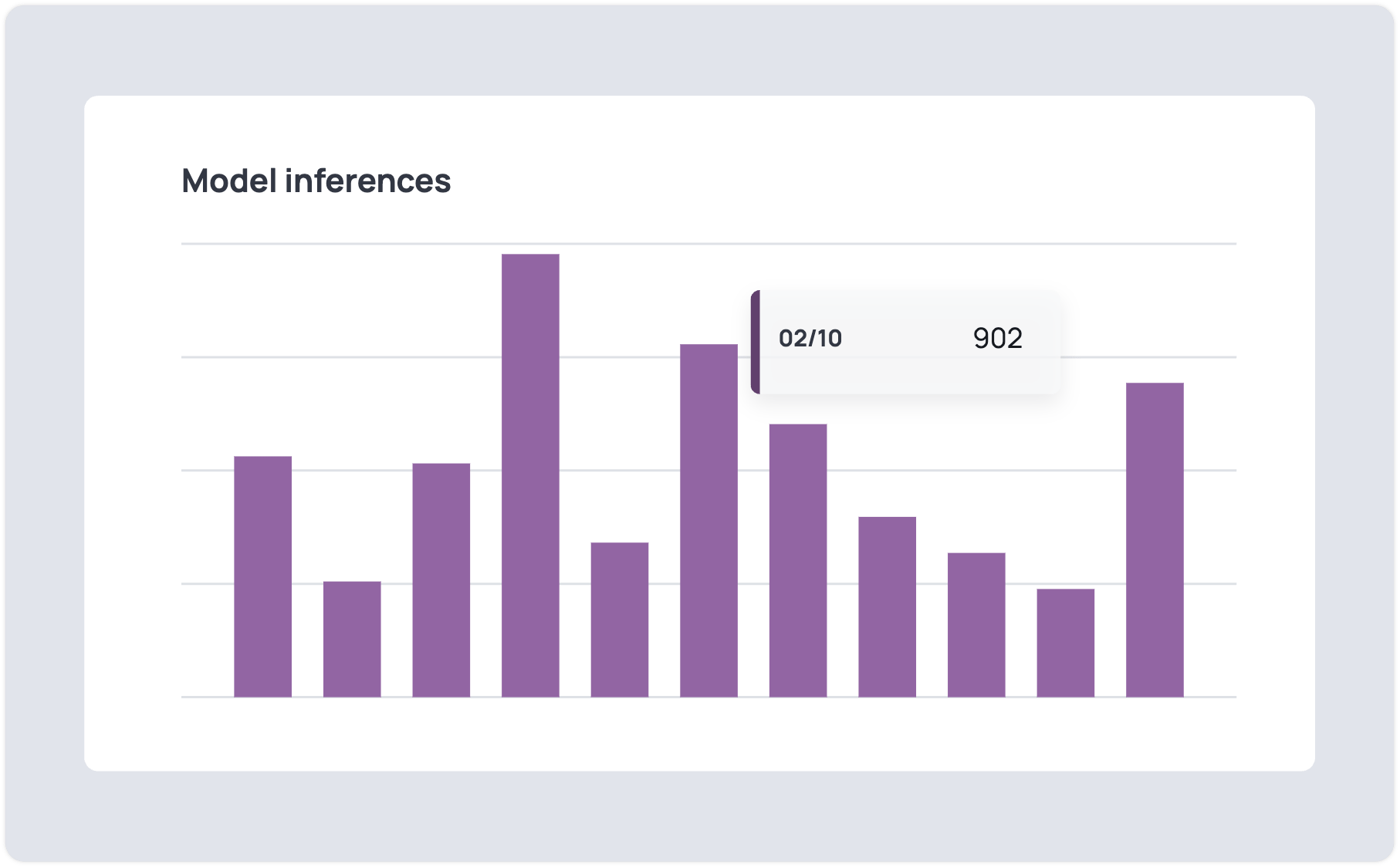

Predictive ML

ML observability

Evaluate input and output quality for predictive tasks, including classification, regression, ranking and recommendations.

Data drift

No model lasts forever. Detect shifts in model inputs and outputs to get ahead of issues.

Get early warnings on model decay without labeled data.

Understand changes in the environment and feature distributions over time.

Monitor for changes in text, tabular data and embeddings.
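To make the idea of a distribution shift concrete, the sketch below compares a reference sample with a current sample using the two-sample Kolmogorov-Smirnov statistic, written in plain Python. This is one common drift statistic chosen for illustration, not a description of how Evidently computes drift; the function names and the 0.2 threshold are assumptions.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    ref = sorted(reference)
    cur = sorted(current)
    if not ref or not cur:
        raise ValueError("both samples must be non-empty")
    # The maximum gap is always attained at one of the observed values.
    return max(
        abs(bisect_right(ref, x) / len(ref) - bisect_right(cur, x) / len(cur))
        for x in ref + cur
    )


def has_drifted(reference, current, threshold=0.2):
    """Flag drift when the statistic exceeds an assumed, illustrative threshold."""
    return ks_statistic(reference, current) > threshold


reference = list(range(100))             # e.g. a feature at training time
shifted = [x + 50 for x in reference]    # the same feature after a shift

print(ks_statistic(reference, reference))  # identical samples give 0.0
print(has_drifted(reference, shifted))
```

Because the check needs only the feature values, it can run before any ground-truth labels arrive. In practice the threshold, or a p-value cutoff, would be tuned per feature.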

Learn more

Data quality

Great models run on great data. Stay on top of data quality across the ML lifecycle.

Automatically profile and visualize your datasets.

Spot nulls, duplicates, unexpected values and range violations in production pipelines.

Inspect and fix issues before they impact model performance and downstream processes.
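The checks listed above can be sketched in a few lines of plain Python. This is a simplified illustration rather than the Evidently API: the function `data_quality_summary`, the row format (a list of dictionaries), and the `ranges` argument are hypothetical.

```python
def data_quality_summary(rows, ranges):
    """Count nulls, exact duplicate rows, and out-of-range values.

    rows:   list of dicts mapping column name to a hashable value (or None)
    ranges: dict mapping column name to an inclusive (low, high) tuple
    """
    null_counts = {}
    range_violations = {}
    seen = set()
    duplicates = 0

    for row in rows:
        # A row counts as a duplicate if an identical row was seen earlier.
        key = tuple(sorted(row.items()))
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)

        for column, value in row.items():
            if value is None:
                null_counts[column] = null_counts.get(column, 0) + 1
            elif column in ranges:
                low, high = ranges[column]
                if not (low <= value <= high):
                    range_violations[column] = range_violations.get(column, 0) + 1

    return {
        "nulls": null_counts,
        "duplicates": duplicates,
        "range_violations": range_violations,
    }


rows = [
    {"age": 31, "country": "DE"},
    {"age": None, "country": "US"},
    {"age": 31, "country": "DE"},   # exact duplicate of the first row
    {"age": 140, "country": "FR"},  # outside the assumed 0-120 range
]
print(data_quality_summary(rows, ranges={"age": (0, 120)}))
```

A summary like this could be computed per batch in a pipeline and compared against expected limits before the data reaches the model.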

Learn more

Model performance

Track model quality for classification, regression, ranking, recommender systems and more.

Get an out-of-the-box performance overview with rich visuals. Grasp trends and catch deviations easily.

Ensure the models comply with your expectations when you deploy, retrain and update them.

Find the root cause of quality drops. Go beyond aggregates to see why a model fails.

Learn more

testimonials

Loved by the community

Evidently is used by thousands of companies, from startups to enterprises.

Dayle Fernandes

MLOps Engineer, DeepL

"We use Evidently daily to test data quality and monitor production data drift. It takes away a lot of headache of building monitoring suites, so we can focus on how to react to monitoring results. Evidently is a very well-built and polished tool. It is like a Swiss army knife we use more often than expected."

Iaroslav Polianskii

Senior Data Scientist, Wise

Egor Kraev

Head of AI, Wise

"At Wise, Evidently proved to be a great solution for monitoring data distribution in our production environment and linking model performance metrics directly to training data. Its wide range of functionality, user-friendly visualization, and detailed documentation make Evidently a flexible and effective tool for our work. These features allow us to maintain robust model performance and make informed decisions about our machine learning systems."

Demetris Papadopoulos

Director of Engineering, Martech, Flo Health

"Evidently is a neat and easy to use product. My team built and owns the business' ML platform, and Evidently has been one of our choices for its composition. Our model performance monitoring module with Evidently at its core allows us to keep an eye on our productionized models and act early."

Moe Antar

Senior Data Engineer, PlushCare

"We use Evidently to continuously monitor our business-critical ML models at all stages of the ML lifecycle. It has become an invaluable tool, enabling us to flag model drift and data quality issues directly from our CI/CD and model monitoring DAGs. We can proactively address potential issues before they impact our end users."

Jonathan Bown

MLOps Engineer, Western Governors University

"The user experience of our MLOps platform has been greatly enhanced by integrating Evidently alongside MLflow. Evidently's preset tests and metrics expedited the provisioning of our infrastructure with the tools for monitoring models in production. Evidently enhanced the flexibility of our platform for data scientists to further customize tests, metrics, and reports to meet their unique requirements."

Niklas von Maltzahn

Head of Decision Science, JUMO

"Evidently is a first-of-its-kind monitoring tool that makes debugging machine learning models simple and interactive. It's really easy to get started!"

Evan Lutins

Machine Learning Engineer, Realtor.com

"At Realtor.com, we implemented a production-level feature drift pipeline with Evidently. This allows us detect anomalies, missing values, newly introduced categorical values, or other oddities in upstream data sources that we do not want to be fed into our models. Evidently's intuitive interface and thorough documentation allowed us to iterate and roll out a drift pipeline rather quickly."

Ming-Ju Valentine Lin

ML Infrastructure Engineer, Plaid

"We use Evidently for continuous model monitoring, comparing daily inference logs to corresponding days from the previous week and against initial training data. This practice prevents score drifts across minor versions and ensures our models remain fresh and relevant. Evidently’s comprehensive suite of tests has proven invaluable, greatly improving our model reliability and operational efficiency."

Javier López Peña

Data Science Manager, Wayflyer

"Evidently is a fantastic tool! We find it incredibly useful to run the data quality reports during EDA and identify features that might be unstable or require further engineering. The Evidently reports are a substantial component of our Model Cards as well. We are now expanding to production monitoring."

Ben Wilson

Principal RSA, Databricks

"Check out Evidently: I haven't seen a more promising model drift detection framework released to open-source yet!"

Join 2500+ ML and AI engineers

Get support, contribute, and chat about AI products.

Collaboration

Built for teams

Bring engineers, product managers, and domain experts to collaborate on AI quality.

UI or API? You choose. You can run all checks programmatically or using the web interface.

Easily share evaluation results to communicate progress and show examples.

Get started

Scale

Ready for enterprise

With an open architecture, Evidently fits into existing environments and helps adopt AI safely and securely.

Private cloud deployment in a region of choice

Role-based access control

Dedicated support and onboarding

Support for multiple organizations

Get Started with AI Observability

Book a personalized 1:1 demo with our team or sign up for a free account.

No credit card required
